Posts Tagged Linux

Samba access problem with Mac OS X 10.6+

This is a problem I encountered when I upgraded Mac OS X from version 10.5 (Leopard) to 10.6 (Snow Leopard). One of my friends ran into a similar problem when she upgraded to version 10.7 (Lion).

The issue affected access to the network shares set up with Samba (version 3.0.24) on my D-Link DNS-323. For some reason, I could no longer authenticate on the shares as soon as I upgraded to Snow Leopard!

Here is the error message I was getting:

After browsing a few forums on the web, I finally found a solution. 🙂

I simply had to change the security mode in the Samba configuration file (smb.conf) to read:

security = USER

Note that this property can be found under the [global] section.
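Before editing the file by hand, it can help to check which security mode is currently set. The functions below are only a sketch: the names `samba_security_mode` and `set_samba_security_mode` are made up for this example, the path to smb.conf is passed as an argument because it differs between systems, and the pattern assumes the option is written as a plain `security = VALUE` line.

```shell
# Print the value of the "security" option found in a Samba config file.
# Lines commented out with "#" or ";" are ignored because they do not
# start with the word "security". If the option appears several times,
# the last occurrence is printed.
samba_security_mode() {
    sed -n 's/^[[:space:]]*security[[:space:]]*=[[:space:]]*\(.*[^[:space:]]\)[[:space:]]*$/\1/p' "$1" | tail -n 1
}

# Write a copy of the config file ($1) to stdout with the security
# mode replaced by $2. The original file is left untouched.
set_samba_security_mode() {
    sed "s/^\([[:space:]]*security[[:space:]]*=\).*/\1 $2/" "$1"
}
```

For example, `set_samba_security_mode smb.conf USER > smb.conf.new` writes the modified configuration to a new file, so the original stays available as a backup until the new one has been checked.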

For more information about the Samba security mode, please read the following article by Jack Wallen:

Understanding Samba security modes

S3 command failed if the time is not synced

This is already the second post about the s3sync Ruby program. The first article focused on monitoring s3sync with Zabbix.

This one is about an error I got when running the S3 synchronisation:

S3 command failed:
list_bucket prefix /data max-keys 200 delimiter /
With result 403 Forbidden
S3 ERROR: #
s3sync.rb:290:in `+': can't convert nil into Array (TypeError)
        from s3sync.rb:290:in `s3TreeRecurse'
        from s3sync.rb:346:in `main'
        from ./thread_generator.rb:79:in `call'
        from ./thread_generator.rb:79:in `initialize'
        from ./thread_generator.rb:76:in `new'
        from ./thread_generator.rb:76:in `initialize'
        from s3sync.rb:267:in `new'
        from s3sync.rb:267:in `main'
        from s3sync.rb:735

As you can see, this error is not very human-friendly! 😮 The only thing we know is that the S3 command failed because of the error can't convert nil into Array. It looks to me like an internal error within s3sync…

But after some investigation, it turned out that the system date on the server was simply not correct. I cannot tell you how much time I spent on this one! 😯

Anyway, if you are doing automatic backups as described on John Eberly’s blog, you need to add the following code at the top of your upload.sh script:

# update the system date
/usr/sbin/ntpdate 3.uk.pool.ntp.org 2.uk.pool.ntp.org 1.uk.pool.ntp.org 0.uk.pool.ntp.org

NB: here are the commands I use to install ntpdate on a Debian server:

apt-get install ntpdate
dpkg-reconfigure tzdata
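To make this kind of failure easier to spot, the script could also check the clock before starting the upload. The sketch below rests on assumptions: `clock_skew_ok` is a name invented for this example, the reference time has to come from a source you trust, and the default limit of 900 seconds reflects the 15-minute tolerance that, as far as I know, S3 applies to request timestamps.

```shell
# Succeed if two Unix timestamps differ by no more than a given limit.
#   $1 = local time in seconds since the epoch (e.g. from `date +%s`)
#   $2 = reference time in seconds since the epoch
#   $3 = maximum allowed difference in seconds (defaults to 900)
clock_skew_ok() {
    local_epoch=$1
    reference_epoch=$2
    max_skew=${3:-900}

    # Absolute difference between the two clocks
    skew=$((local_epoch - reference_epoch))
    if [ "$skew" -lt 0 ]; then
        skew=$((0 - skew))
    fi

    [ "$skew" -le "$max_skew" ]
}
```

A call such as `clock_skew_ok "$(date +%s)" "$reference" || echo "System clock is off"` would then print a readable warning instead of leaving you with the stack trace above. For a quick manual check, `ntpdate -q` followed by a server name reports the offset without changing the clock.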

Monitor s3sync with Zabbix

s3sync is a Ruby program that easily transfers directories between a local directory and an S3 bucket:prefix. It behaves somewhat, but not precisely, like the rsync program.

I am using this tool to automatically back up the important data from Debian servers to Amazon S3. I am not going to explain how to install s3sync here, as that is not the purpose of this article. However, you can read this very useful article from John Eberly’s blog: How I automated my backups to Amazon S3 using s3sync.

If you followed the steps from John Eberly’s post, you should have an upload.sh script and a crontab job which executes this script periodically.

From this point, here is what you need to do to monitor the success of the synchronisation with Zabbix:

- Add the following code at the end of your upload.sh script:

# print the exit code
RETVAL=$?
[ $RETVAL -eq 0 ] && echo "Synchronization succeed"
[ $RETVAL -ne 0 ] && echo "Synchronization failed"

- Log the output of the cron script as follows:

30 2 * * sun /path/to/upload.sh > /var/log/s3sync.log 2>&1

- On Zabbix, create a new item which will check the existence of the sentence “Synchronization failed” in the file /var/log/s3sync.log:

Item key: vfs.file.regmatch[/var/log/s3sync.log,Synchronization failed]

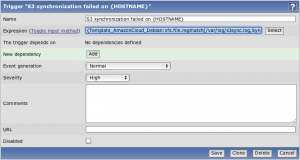

- Still on Zabbix, define a new trigger for the previously created item:

Trigger expression: {Template_AmazonCloud_Debian:vfs.file.regmatch[/var/log/s3sync.log,Synchronization failed].last(0)}=1

With these few steps, you should now receive Zabbix alerts when a backup on S3 fails. 🙂
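If you want to test the chain without waiting for a real backup to fail, the steps above can be imitated locally. This is only a sketch: `report_sync_status` and `log_has_failure` are names invented for this example, and the second function merely mimics what I understand the `vfs.file.regmatch` item to return (1 when the expression is found in the file, 0 otherwise).

```shell
# Print the status line that upload.sh writes to its log.
#   $1 = exit code of the synchronisation command
report_sync_status() {
    if [ "$1" -eq 0 ]; then
        echo "Synchronization succeed"
    else
        echo "Synchronization failed"
    fi
}

# Print 1 if the log file ($1) contains the failure sentence, else 0.
log_has_failure() {
    if grep -q "Synchronization failed" "$1"; then
        echo 1
    else
        echo 0
    fi
}
```

Writing `report_sync_status 1` into a test log and running `log_has_failure` on it should print 1, which is the value the trigger expression compares against.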

Refresh GeoIP automatically

GeoIP is a very useful tool provided by MaxMind. It can determine in real time which country, region, city, postal code, and area code a visitor is coming from. For more information, visit the MaxMind website.

This tool also comes with an Apache module that can redirect users depending on their location. For example, we could redirect all users from France to the French home page of a multi-language website, or we could block the traffic from users in a specific country.

To install this module on a Debian server, you simply need to run the following command:

apt-get install libapache2-mod-geoip

But, how does this module work? How does it know where the user comes from? 😯

It is actually quite simple: GeoIP uses a file that maps IP addresses to countries. On Debian, this file is stored in the folder /usr/share/GeoIP and is named GeoIP.dat.

However, IP address allocations change all the time, so this file goes out of date very quickly. This is why MaxMind provides an updated file for free at the beginning of each month. To read the installation instructions, please click the following link: http://www.maxmind.com/app/installation

This is all well and good, but who will remember, or even have the time, to update this file every month? And imagine having to do this on hundreds of servers!

The solution is to use a shell script which will download, extract and install the updated GeoIP file automatically once a month:

#!/bin/sh
# Go in the GeoIP folder (stop if it does not exist)
cd /usr/share/GeoIP || exit 1
# Remove the previous GeoIP file (if present)
rm -f GeoIP.dat.gz
# Download the new GeoIP file
wget http://geolite.maxmind.com/download/geoip/database/GeoLiteCountry/GeoIP.dat.gz
# Remove the previous GeoIP backup file (if present)
rm -f GeoIP.dat.bak
# Backup the existing GeoIP file
mv GeoIP.dat GeoIP.dat.bak
# Extract the new GeoIP file
gunzip GeoIP.dat.gz
# Change the permission of the GeoIP file
chmod 644 GeoIP.dat
# Reload Apache
service apache2 reload

You can save this script as /root/update_geoip.sh, make it executable and set up the following crontab job:

0 0 3 * * /root/update_geoip.sh

This will execute the script automatically on the third day of every month.
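One weakness of the script above is that it moves the current database aside even when the download has failed, which leaves Apache without a GeoIP.dat until the next run. A possible variant, sketched here under assumptions, validates the archive first and only then swaps the files. The function name `install_geoip_archive` and its two arguments are made up for this example.

```shell
# Install a downloaded GeoIP archive only if it is a valid gzip file.
#   $1 = path to the downloaded .gz archive
#   $2 = path to the database to replace (e.g. /usr/share/GeoIP/GeoIP.dat)
# Returns non-zero and leaves the current database untouched on error.
install_geoip_archive() {
    archive=$1
    target=$2

    # Refuse to go further if the archive is missing or corrupt
    gzip -t "$archive" 2>/dev/null || return 1

    # Keep a copy of the current database, if there is one
    if [ -f "$target" ]; then
        cp -p "$target" "$target.bak" || return 1
    fi

    # Extract next to the target, then rename it into place
    gunzip -c "$archive" > "$target.new" || return 1
    chmod 644 "$target.new"
    mv "$target.new" "$target"
}
```

In the update script, this would replace the steps between the download and the Apache reload, for instance `install_geoip_archive GeoIP.dat.gz GeoIP.dat && service apache2 reload`.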

Differences between Amazon instances

I have been using Amazon Cloud for website hosting for more than a year now. I have to admit that it works pretty well and I am quite happy with their services.

However, I got a strange problem a few days ago.

I was deploying an application on two different large instances of Amazon Cloud: one would be the UAT (User Acceptance Testing) server and the other the Production server.

Strangely enough, the version running on the Production server was running slower than on the UAT server! 😯 Why?

At first, I thought I had missed something in the server configurations. But no, everything was absolutely identical! So where did this difference in speed come from?

After some more investigation, I had the brilliant idea to execute the following command:

cat /proc/cpuinfo

On the Production server, this command was returning the following:

processor : 0
vendor_id : AuthenticAMD
cpu family : 15
model : 65
model name : Dual-Core AMD Opteron(tm) Processor 2218 HE
stepping : 3
cpu MHz : 2599.998
cache size : 1024 KB
physical id : 0
siblings : 1
core id : 0
cpu cores : 1
fpu : yes
fpu_exception : yes
cpuid level : 1
wp : yes
flags : fpu tsc msr pae mce cx8 apic mca cmov pat pse36 clflush mmx fxsr sse sse2 ht syscall nx mmxext fxsr_opt rdtscp lm 3dnowext 3dnow pni cx16 lahf_lm cmp_legacy svm extapic cr8_legacy
bogomips : 5202.40
TLB size : 1024 4K pages
clflush size : 64
cache_alignment : 64
address sizes : 40 bits physical, 48 bits virtual
power management: ts fid vid ttp tm stc

processor : 1
vendor_id : AuthenticAMD
cpu family : 15
model : 65
model name : Dual-Core AMD Opteron(tm) Processor 2218 HE
stepping : 3
cpu MHz : 2599.998
cache size : 1024 KB
physical id : 1
siblings : 1
core id : 0
cpu cores : 1
fpu : yes
fpu_exception : yes
cpuid level : 1
wp : yes
flags : fpu tsc msr pae mce cx8 apic mca cmov pat pse36 clflush mmx fxsr sse sse2 ht syscall nx mmxext fxsr_opt rdtscp lm 3dnowext 3dnow up pni cx16 lahf_lm cmp_legacy svm extapic cr8_legacy
bogomips : 5202.40
TLB size : 1024 4K pages
clflush size : 64
cache_alignment : 64
address sizes : 40 bits physical, 48 bits virtual
power management: ts fid vid ttp tm stc

And on the UAT server, it was returning the following:

processor : 0
vendor_id : GenuineIntel
cpu family : 6
model : 23
model name : Intel(R) Xeon(R) CPU E5430 @ 2.66GHz
stepping : 10
cpu MHz : 2666.762
cache size : 6144 KB
physical id : 0
siblings : 1
core id : 0
cpu cores : 1
fpu : yes
fpu_exception : yes
cpuid level : 13
wp : yes
flags : fpu de tsc msr pae cx8 apic sep cmov pat clflush acpi mmx fxsr sse sse2 ss ht syscall nx lm constant_tsc pni ssse3 cx16 lahf_lm
bogomips : 5337.55
clflush size : 64
cache_alignment : 64
address sizes : 38 bits physical, 48 bits virtual
power management:

processor : 1
vendor_id : GenuineIntel
cpu family : 6
model : 23
model name : Intel(R) Xeon(R) CPU E5430 @ 2.66GHz
stepping : 10
cpu MHz : 2666.762
cache size : 6144 KB
physical id : 1
siblings : 1
core id : 0
cpu cores : 1
fpu : yes
fpu_exception : yes
cpuid level : 13
wp : yes
flags : fpu de tsc msr pae cx8 apic sep cmov pat clflush acpi mmx fxsr sse sse2 ss ht syscall nx lm constant_tsc up pni ssse3 cx16 lahf_lm
bogomips : 5337.55
clflush size : 64
cache_alignment : 64
address sizes : 38 bits physical, 48 bits virtual
power management:

As you can see, these two servers actually don’t have the same CPU model name. But the biggest difference is probably the CPU MHz (2666.762 on UAT and 2599.998 on Production) and the cache size (6MB on UAT and only 1MB on Production).
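Reading the full output side by side is tedious, so the three fields that matter here can be pulled out with a few lines of awk. This is a minimal sketch: `cpu_summary` is a name chosen for this example, and it assumes the `key : value` layout shown above, reporting the values of the last processor listed.

```shell
# Summarise model name, clock speed and cache size from a file in
# /proc/cpuinfo format. When several processors are listed, the
# values of the last one are printed.
#   $1 = file to read (normally /proc/cpuinfo)
cpu_summary() {
    awk -F': *' '
        /^model name/ { model = $2 }
        /^cpu MHz/    { mhz = $2 }
        /^cache size/ { cache = $2 }
        END { printf "%s | %s MHz | %s cache\n", model, mhz, cache }
    ' "$1"
}
```

Running `cpu_summary /proc/cpuinfo` on each server gives one line per machine, which makes a hardware difference like this one much quicker to notice.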

So what does that mean? Two large instances of Amazon Cloud actually don’t have the same power?

The answer to this question is actually on the Amazon instance types page (http://aws.amazon.com/ec2/instance-types/), in the ‘Measuring Compute Resources’ section:

Amazon EC2 uses a variety of measures to provide each instance with a consistent and predictable amount of CPU capacity. In order to make it easy for developers to compare CPU capacity between different instance types, we have defined an Amazon EC2 Compute Unit. The amount of CPU that is allocated to a particular instance is expressed in terms of these EC2 Compute Units. We use several benchmarks and tests to manage the consistency and predictability of the performance of an EC2 Compute Unit. One EC2 Compute Unit provides the equivalent CPU capacity of a 1.0-1.2 GHz 2007 Opteron or 2007 Xeon processor.

In conclusion, two instances of the same Amazon Cloud type have the same number of EC2 Compute Units, but because an EC2 Compute Unit is equivalent to a CPU capacity of anywhere between 1.0 GHz and 1.2 GHz, and the underlying processors differ, the actual speed of each instance will be slightly different!

Mystery solved. 😉