Posts Tagged shell

Monitor s3sync with Zabbix

s3sync is a Ruby program that easily transfers directories between a local directory and an S3 bucket:prefix. It behaves somewhat, but not precisely, like the rsync program.

I use this tool to automatically back up the important data from Debian servers to Amazon S3. I am not going to explain how to install s3sync here, as that is not the purpose of this article. However, you can read this very useful article from John Eberly’s blog: How I automated my backups to Amazon S3 using s3sync.

If you followed the steps from John Eberly’s post, you should have an upload.sh script and a crontab job which executes this script periodically.

From this point, here is what you need to do to monitor the success of the synchronisation with Zabbix:

- Add the following code at the end of your upload.sh script:

# report the outcome based on the exit code of the last command
RETVAL=$?
[ $RETVAL -eq 0 ] && echo "Synchronization succeeded"
[ $RETVAL -ne 0 ] && echo "Synchronization failed"

- Log the output of the cron script as follows:

30 2 * * sun /path/to/upload.sh > /var/log/s3sync.log 2>&1

- On Zabbix, create a new item which will check for the sentence “Synchronization failed” in the file /var/log/s3sync.log:

Item key: vfs.file.regmatch[/var/log/s3sync.log,Synchronization failed]

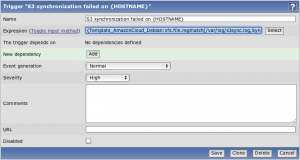

- Still on Zabbix, define a new trigger for the previously created item:

Trigger expression: {Template_AmazonCloud_Debian:vfs.file.regmatch[/var/log/s3sync.log,Synchronization failed].last(0)}=1

With these few steps, you should now receive Zabbix alerts when a backup on S3 fails. 🙂
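Before relying on the alert, it can help to reproduce the check by hand. The sketch below only approximates what the item does: `sync_failed` is a hypothetical helper name, and it uses grep rather than the Zabbix agent itself. It prints 1 when the log contains the failure sentence and 0 otherwise.

```shell
#!/bin/sh
# Hypothetical helper: mimic the regmatch item locally.
# Prints 1 if the log contains "Synchronization failed", 0 otherwise.
# Simplification: a missing or unreadable log is also reported as 0.
sync_failed() {
    log_file="$1"
    if grep -q "Synchronization failed" "$log_file" 2>/dev/null; then
        echo 1
    else
        echo 0
    fi
}
```

Running `sync_failed /var/log/s3sync.log` after a backup should print the value the trigger compares against. Since the cron job redirects with `>`, the log is overwritten on every run, so only the most recent synchronisation is taken into account.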

Refresh GeoIP automatically

GeoIP is a very useful tool provided by MaxMind. It can determine in real time which country, region, city, postal code, and area code a visitor is coming from. For more information, visit the MaxMind website.

This tool also comes with an Apache module that can redirect users depending on their location. For example, we could redirect all users from France to the French home page of a multi-language website, or we could block traffic from a specific country.

To install this module on a Debian server, you simply need to run the following command:

apt-get install libapache2-mod-geoip

But, how does this module work? How does it know where the user comes from? 😯

It is actually quite simple: GeoIP uses a file that maps IP addresses to countries. On Debian, this file is stored in the folder /usr/share/GeoIP and is named GeoIP.dat.

However, IP address allocations change all the time, so this file gets out of date very quickly. This is why MaxMind provides an updated file at the beginning of each month for free. To read the installation instructions, please click the following link: http://www.maxmind.com/app/installation

This is all well and good, but who will remember, or even have the time, to update this file every month? And imagine having to do this on hundreds of servers!

The solution is to use a shell script which will download, extract and install the updated GeoIP file automatically once a month:

#!/bin/sh
# Go to the GeoIP folder (stop if it does not exist)
cd /usr/share/GeoIP || exit 1
# Remove the previous GeoIP archive (if present)
rm -f GeoIP.dat.gz
# Download the new GeoIP file
wget http://geolite.maxmind.com/download/geoip/database/GeoLiteCountry/GeoIP.dat.gz
# Remove the previous GeoIP backup file (if present)
rm -f GeoIP.dat.bak
# Back up the existing GeoIP file
mv GeoIP.dat GeoIP.dat.bak
# Extract the new GeoIP file
gunzip GeoIP.dat.gz
# Change the permissions of the GeoIP file
chmod 644 GeoIP.dat
# Reload Apache
service apache2 reload

You can save this script as /root/update_geoip.sh, make it executable, and set up the following crontab job:

0 0 3 * * /root/update_geoip.sh

This will execute the script automatically on the third day of every month.
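If the download fails or the archive is corrupt, the script above moves the current GeoIP.dat aside before finding out that there is nothing valid to extract. As a rough sketch, and not part of the original script, the extract-and-swap part could be wrapped in a function that only replaces the database once extraction has succeeded. The names `install_geoip` and `GeoIP.dat.new` are assumptions made for illustration.

```shell
#!/bin/sh
# Hypothetical helper: install a downloaded GeoIP.dat.gz into a target folder.
# The existing GeoIP.dat is only replaced once the archive has been
# extracted successfully.
install_geoip() {
    archive="$1"      # path to the downloaded GeoIP.dat.gz
    target_dir="$2"   # e.g. /usr/share/GeoIP

    # Extract to a temporary file first; stop if the archive is unusable
    if ! gunzip -c "$archive" > "$target_dir/GeoIP.dat.new"; then
        rm -f "$target_dir/GeoIP.dat.new"
        return 1
    fi

    # Keep the previous database as a backup, if there is one
    if [ -f "$target_dir/GeoIP.dat" ]; then
        mv -f "$target_dir/GeoIP.dat" "$target_dir/GeoIP.dat.bak"
    fi

    mv "$target_dir/GeoIP.dat.new" "$target_dir/GeoIP.dat"
    chmod 644 "$target_dir/GeoIP.dat"
}
```

A script built on this would download the archive, call something like `install_geoip GeoIP.dat.gz /usr/share/GeoIP`, and reload Apache only when the function returns successfully.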

run-parts gives an exec format error

I ran into a problem the other day with a shell script I wrote.

Basically, the script worked perfectly fine when I executed it directly from the command line, but it failed whenever I tried to run it with run-parts!

This is the error message it returned:

%prompt> run-parts --report /etc/cron.daily
/etc/cron.daily/myscript:
run-parts: failed to exec /etc/cron.daily/myscript: Exec format error
run-parts: /etc/cron.daily/myscript exited with return code 1
%prompt>

Actually, the answer to this problem is quite simple! 🙂

I simply forgot the shebang on the first line of the script…

So, if you get the same error as I did, make sure you have the following line at the beginning of your script:

#!/bin/sh
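To spot other scripts with the same problem, a small check along these lines may help. It is only a sketch: `missing_shebang` is a hypothetical helper name, and it simply reports every regular file in a folder whose first two bytes are not `#!`.

```shell
#!/bin/sh
# Hypothetical helper: list the regular files in a directory that do not
# start with "#!".
missing_shebang() {
    dir="$1"
    for f in "$dir"/*; do
        [ -f "$f" ] || continue
        # Only the first two bytes matter for the shebang
        first_two=$(head -c 2 "$f")
        case "$first_two" in
            '#!') ;;            # shebang present, nothing to report
            *) echo "$f" ;;
        esac
    done
}
```

For example, `missing_shebang /etc/cron.daily` prints the path of each file that lacks a shebang. Compiled binaries would be listed as well, so the output is a list of candidates to review rather than a list of errors.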

Segmentation fault at end of PHP script

This one is a very strange problem!

I created a little PHP script which connects to a MySQL database. Nothing amazing, except that I needed to run it via command line using the php command. What happened is that the script ran perfectly fine but, for some reason, it returned “Segmentation fault” when it reached the end of the file! 😯

It reminds me of the old days when I worked in C and used to see this not-very-useful message quite often. 😉

Anyway, why do we get a “Segmentation fault” at the end of a PHP script? And when I say ‘the end’, I mean after every other line has been executed!

Looking around the web, I found the following bug report: http://bugs.php.net/bug.php?id=43823

This user seems to have the same problem as me, except that he is using a PostgreSQL database instead of a MySQL database.

Reading through the page, it looks like the problem comes from the order in which PHP loads its extensions! They explain that PostgreSQL should be loaded before cURL. If I apply the same logic, MySQL should then be loaded before cURL too.

To do that, we need to comment out the line extension=curl.so from the file /etc/php5/cli/conf.d/curl.ini:

# configuration for php CURL module
#extension=curl.so

And add it to the file /etc/php5/cli/conf.d/mysql.ini AFTER the line extension=mysql.so:

# configuration for php MySQL module
extension=mysql.so
extension=curl.so

To be honest, I don’t really like this solution as it is a bit messy. I would prefer that the PHP developers fix the root cause, or at least provide a way to change the order in which PHP loads the extensions.

NB: I was executing this PHP script on Debian GNU/Linux 5.0 with Apache 2.2.9 and PHP 5.2.6.
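As far as I know, PHP reads the files of the conf.d folder in alphabetical order, which would explain why curl.ini normally comes before mysql.ini. The sketch below is one way to review the resulting order. `extension_order` is a hypothetical helper name, and it only approximates what PHP does by listing the uncommented extension lines file by file.

```shell
#!/bin/sh
# Hypothetical helper: print the active "extension=" lines of a conf.d
# folder, reading the .ini files in the order the shell sorts them
# (alphabetical, which approximates the order PHP uses).
extension_order() {
    conf_dir="$1"
    for ini in "$conf_dir"/*.ini; do
        [ -f "$ini" ] || continue
        # Commented lines ("#" or ";") do not match and are skipped
        grep -E '^[[:space:]]*extension[[:space:]]*=' "$ini"
    done
}
```

With the two files edited as described, `extension_order /etc/php5/cli/conf.d` should list extension=mysql.so before extension=curl.so.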

ntpd process on D-Link DNS-313

While configuring a D-Link DNS-313, which is basically a NAS (Network-Attached Storage), I ran into a serious but easy-to-fix problem. 🙂

In order to get command-line access to the box, I installed the Fonz fun_plug. I then wanted to automatically synchronise the internal clock with some NTP Pool Time Servers. But, for some reason, the version of ntpd provided with fun_plug completely freezes the NAS. I wasn’t able to find the root cause. Trust me, I tried everything I could think of!

Please also note that the same process works perfectly fine on its sibling, the D-Link DNS-323. As I said, I can’t explain why… 🙄

But there is good news! An ntpd binary is actually part of the D-Link DNS-313 firmware, and it works fine! 😀 After double-checking, this binary is however NOT part of the D-Link DNS-323 firmware. Why is that? Maybe D-Link got complaints from DNS-313 users and fixed it? Who knows…

Anyway, in order to get ntpd to work on the D-Link DNS-313, you need to replace the content of your ntpd startup script (/ffp/start/ntpd.sh) with the one below:

#!/ffp/bin/sh

# PROVIDE: ntpd
# REQUIRE: SERVERS
# BEFORE: LOGIN

. /ffp/etc/ffp.subr

name="ntpd"
command="/usr/sbin/ntpd"
ntpd_flags="-f /ffp/etc/ntpd.conf"
required_files="/ffp/etc/ntpd.conf"
start_cmd="ntpd_start"

ntpd_start()
{
    # remove rtc and daylight cron jobs
    crontab -l | grep -vw '/usr/sbin/daylight' | grep -vw '/usr/sbin/rtc' | crontab -
    proc_start $command
}

run_rc_command "$1"
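To see what the crontab line in ntpd_start does without touching the real crontab, the same filter can be tried on sample input. This is only an illustration: `strip_time_jobs` is a hypothetical name, and the three cron entries are made up.

```shell
#!/bin/sh
# Hypothetical helper: the same filter as in ntpd_start, but reading from
# standard input instead of the live crontab.
strip_time_jobs() {
    grep -vw '/usr/sbin/daylight' | grep -vw '/usr/sbin/rtc'
}

# Sample entries, made up for the demonstration
printf '%s\n' \
    '30 2 * * * /usr/sbin/rtc -s' \
    '0 3 * * * /usr/sbin/daylight' \
    '*/5 * * * * /ffp/bin/example-job' | strip_time_jobs
```

Only the last sample entry survives the filter. In the startup script, the filtered list is piped back into `crontab -`, which replaces the current crontab with it.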